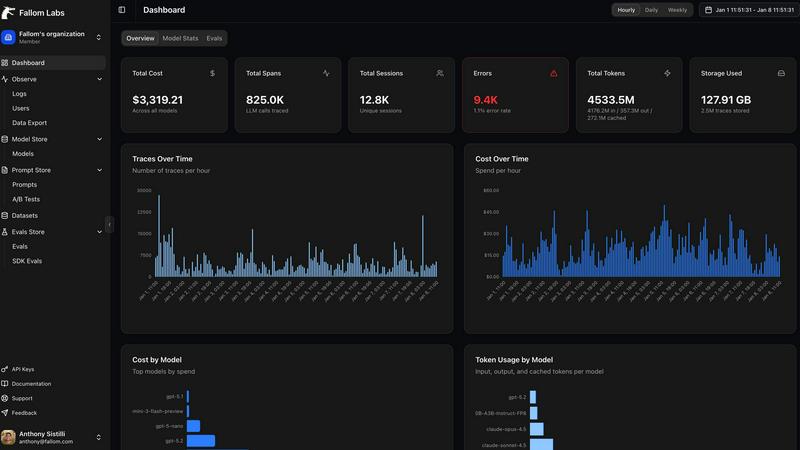

Fallom

Fallom offers real-time observability for LLMs, enabling efficient tracking, debugging, and cost management of AI age...

Fallom is an AI-native observability platform built for monitoring and optimizing large language model (LLM) and agent workloads. It gives organizations visibility into every LLM call in production through end-to-end tracing that captures prompts, outputs, tool calls, token counts, latency, and the cost of each interaction. This level of detail helps developers, data scientists, and operations teams who need real-time insight into LLM performance and a fast way to troubleshoot issues.

For enterprise use, Fallom attaches session-, user-, and customer-level context to each trace, shows timing waterfalls for multi-step agents, and supports compliance through audit trails that include logging, model versioning, and consent tracking. Because it ships as a single OpenTelemetry-native SDK, teams can set up monitoring in minutes, then watch usage live, debug issues quickly, and attribute spending accurately across models, users, and teams.
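To make the idea of per-call cost attribution concrete, here is a minimal, stdlib-only sketch of how traced LLM calls carrying model, user, team, and token counts can be rolled up into spend per model, user, or team. It is not Fallom's SDK or API: the `LLMCallRecord` type, the `allocate_cost` helper, the model names, and the per-1K-token prices are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical per-1K-token prices in USD; real prices depend on the provider and model.
PRICES_PER_1K = {
    "model-a": {"input": 0.5, "output": 1.5},
    "model-b": {"input": 3.0, "output": 15.0},
}


@dataclass
class LLMCallRecord:
    """One traced LLM call (hypothetical shape): attribution context, tokens, latency."""
    model: str
    user: str
    team: str
    input_tokens: int
    output_tokens: int
    latency_ms: float

    def cost(self) -> float:
        """Cost of this call in USD, using the hypothetical price table above."""
        price = PRICES_PER_1K[self.model]
        return (self.input_tokens * price["input"]
                + self.output_tokens * price["output"]) / 1000


def allocate_cost(records, key):
    """Sum per-call cost grouped by an attribute such as 'model', 'user', or 'team'."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += record.cost()
    return dict(totals)


records = [
    LLMCallRecord("model-a", "alice", "search", 1000, 500, 320.0),
    LLMCallRecord("model-b", "bob", "support", 2000, 1000, 910.0),
    LLMCallRecord("model-a", "bob", "search", 4000, 0, 150.0),
]

print(allocate_cost(records, "model"))  # {'model-a': 3.25, 'model-b': 21.0}
print(allocate_cost(records, "user"))   # {'alice': 1.25, 'bob': 23.0}
print(allocate_cost(records, "team"))   # {'search': 3.25, 'support': 21.0}
```

The design choice here is to compute cost once per call and keep attribution keys on the record itself, so the same data can be regrouped by any dimension without re-pricing.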

You may also like:

Pentest.fyi

Easily find and connect with top penetration testing companies worldwide to meet your specific security needs.

Blueberry

Blueberry is an all-in-one Mac app that streamlines web app development by integrating your editor, terminal, and…

Anti Tempmail

Anti TempMail is an email verification API that enhances growth and risk management with accurate, explainable…