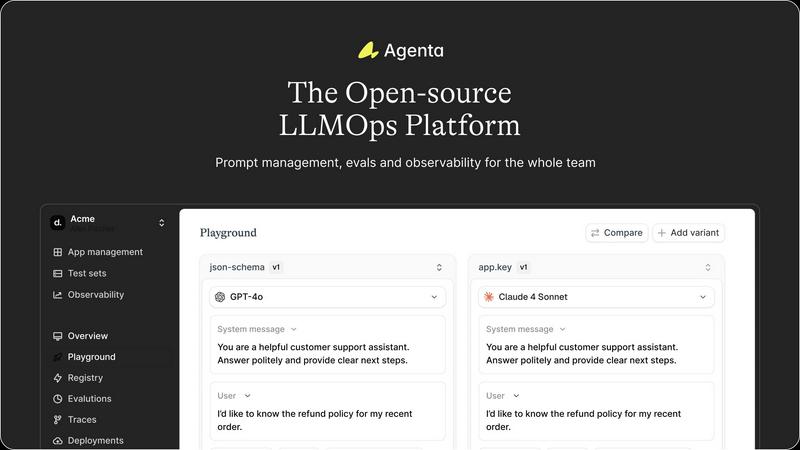

About Agenta

Agenta is an open-source LLMOps platform for AI teams that build and ship LLM applications. It acts as a centralized hub where developers, product managers, and subject matter experts collaborate, replacing siloed, ad hoc workflows with structured, evidence-based processes.

The platform addresses the unpredictable nature of LLMs with integrated tools for the entire development lifecycle. Teams use Agenta to experiment with different prompts and models in a unified playground, run systematic automated and human evaluations to validate changes, and observe production systems with detailed tracing to debug issues quickly.

Its core value proposition is replacing guesswork and scattered tools with a single source of truth, so teams can iterate faster, deploy with confidence, and maintain performance over time. Because Agenta is open-source and model-agnostic, it offers flexibility and avoids vendor lock-in.

Features of Agenta

Unified Experimentation Playground

Agenta provides a central playground where teams can experiment with and compare different prompts, parameters, and foundation models side-by-side. This eliminates the need to scatter prompts across emails and documents. Every iteration is automatically versioned, creating a complete history of changes. Crucially, you can debug using real production data, allowing you to replay errors and test fixes in a controlled environment before redeploying.
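The versioning and side-by-side comparison described above can be pictured with a small sketch. This is not Agenta's API: `PromptHistory`, `commit`, and `render_side_by_side` are hypothetical names chosen for illustration, and no model is called.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    model: str


class PromptHistory:
    """Hypothetical append-only history: every change becomes a new version."""

    def __init__(self) -> None:
        self._versions: List[PromptVersion] = []

    def commit(self, template: str, model: str) -> PromptVersion:
        # Versions are numbered from 1 and never overwritten.
        entry = PromptVersion(len(self._versions) + 1, template, model)
        self._versions.append(entry)
        return entry

    def get(self, version: int) -> PromptVersion:
        if not 1 <= version <= len(self._versions):
            raise KeyError(f"unknown prompt version: {version}")
        return self._versions[version - 1]


def render_side_by_side(
    history: PromptHistory, versions: List[int], variables: Dict[str, str]
) -> Dict[int, str]:
    """Fill each requested version with the same inputs for comparison."""
    return {v: history.get(v).template.format(**variables) for v in versions}


history = PromptHistory()
history.commit("Summarize: {text}", model="model-a")
history.commit("Summarize in one sentence: {text}", model="model-b")
rendered = render_side_by_side(history, [1, 2], {"text": "LLMOps notes"})
```

Keeping the history append-only is what makes an earlier prompt recoverable after a later change turns out to be worse.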

Automated and Flexible Evaluation

Replace subjective "vibe checks" with evidence-based validation. Agenta allows you to create a systematic evaluation process using a variety of evaluators. You can leverage LLM-as-a-judge, use built-in metrics, or integrate your own custom code. It supports evaluating full agentic traces, not just final outputs, and seamlessly incorporates human feedback from domain experts into the evaluation workflow for comprehensive testing.
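As a rough illustration of what a custom code evaluator involves, the sketch below scores an application against a small test set. It assumes nothing about Agenta's SDK: `EvalCase`, `exact_match`, `run_evaluation`, and `stub_app` are hypothetical, and the stub stands in for a real LLM application.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    inputs: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the output equals the expected answer, ignoring case and outer whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_evaluation(
    app: Callable[[str], str],
    cases: List[EvalCase],
    evaluator: Callable[[str, str], float],
) -> float:
    """Return the mean evaluator score over all test cases."""
    if not cases:
        return 0.0
    scores = [evaluator(app(case.inputs), case.expected) for case in cases]
    return sum(scores) / len(scores)


def stub_app(question: str) -> str:
    # Hypothetical application under test; the second answer is deliberately wrong.
    answers = {"capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(question, "")


cases = [EvalCase("capital of France?", "paris"), EvalCase("2 + 2?", "4")]
score = run_evaluation(stub_app, cases, exact_match)
```

Here one of the two cases passes, so the mean score is 0.5. Swapping `exact_match` for another function with the same signature changes the metric without touching the loop.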

Production Observability & Tracing

Gain deep visibility into your live LLM applications. Agenta traces every request, allowing you to pinpoint the exact failure points in complex chains or agentic workflows. Any production trace can be turned into a test case with one click, closing the feedback loop. The platform also supports live evaluations that monitor performance and detect regressions, and it lets your team or end users annotate traces collaboratively.
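The idea of locating a failing step in a trace and saving it as a test case can be sketched as follows. The `Span` and `Trace` structures and both functions are hypothetical simplifications for illustration, not Agenta's data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Span:
    name: str
    input: str
    output: str
    error: Optional[str] = None


@dataclass
class Trace:
    trace_id: str
    spans: List[Span] = field(default_factory=list)


def first_failure(trace: Trace) -> Optional[Span]:
    """Return the earliest span that recorded an error, if any."""
    for span in trace.spans:
        if span.error is not None:
            return span
    return None


def trace_to_test_case(trace: Trace) -> Dict[str, Optional[str]]:
    """Keep the original input and the failing step so the case can be replayed."""
    failed = first_failure(trace)
    return {
        "source_trace": trace.trace_id,
        "inputs": trace.spans[0].input if trace.spans else None,
        "failed_step": failed.name if failed else None,
    }


trace = Trace(
    "trace-001",
    [
        Span("retrieve", "refund policy?", "3 documents"),
        Span("generate", "3 documents", "", error="context too long"),
    ],
)
test_case = trace_to_test_case(trace)
```

Recording the trace ID alongside the inputs keeps the test case linked to the production request it came from.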

Collaborative Workflow for Cross-Functional Teams

Agenta breaks down silos by bringing product managers, developers, and domain experts into a single workflow. It provides a safe, no-code UI for experts to edit and experiment with prompts. Product managers can run evaluations and compare experiments directly from the interface. This collaboration is backed by full parity between the UI and API, ensuring both programmatic and manual workflows integrate into one central hub.

Use Cases of Agenta

Streamlining Prompt Engineering and Management

Teams can centralize their prompt development, moving away from disjointed spreadsheets and chat threads. Using the unified playground, they can rapidly prototype, A/B test different prompt strategies, and maintain a versioned history of what works. This creates a reproducible and auditable process for improving prompt performance before any code is deployed.

Validating Changes Before Deployment

Before shipping an update to a production LLM application, teams can use Agenta to run comprehensive evaluations. They can test new prompts or models against a curated set of test cases, using automated judges and human feedback to gather concrete evidence of improvement or regression, ensuring only validated changes are promoted.

Debugging Complex Production Issues

When an AI agent behaves unexpectedly in production, engineers can use Agenta's observability tools to trace the exact request. They can inspect each step in the agent's reasoning, identify where it went wrong, save the problematic trace as a test case, and iterate on a fix in the playground. This turns debugging from guesswork into a structured process.

Enabling Domain Expert Collaboration

Non-technical subject matter experts (e.g., in legal, customer support, or marketing) can be directly involved in refining AI behavior. Through Agenta's UI, they can safely adjust prompts, provide feedback on outputs, and participate in evaluation processes, ensuring the final application aligns with domain-specific knowledge and requirements without requiring engineering intervention.

Frequently Asked Questions

Is Agenta really open-source?

Yes, Agenta is a fully open-source platform. You can view the source code on GitHub, contribute to the project, and self-host the entire platform. This provides transparency, allows for customization, and ensures you are not locked into a specific vendor's ecosystem or pricing model.

What LLM frameworks and providers does Agenta support?

Agenta is designed to be model-agnostic and framework-friendly. It integrates with popular frameworks like LangChain and LlamaIndex and works with any model provider, including OpenAI, Anthropic, and Google, as well as open-source models served through tools such as Ollama or vLLM. You can compare models from different providers within the same playground.

How does Agenta handle evaluation and testing?

Agenta provides a flexible evaluation system. You can create test datasets and use various evaluators: LLM-as-a-judge (using one LLM to grade another), built-in metrics (e.g., correctness, hallucination), or your own custom Python code. It also supports human-in-the-loop evaluation, allowing domain experts to label and score outputs directly in the platform.
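The LLM-as-a-judge pattern mentioned above, where one model grades another's output, can be sketched in a few lines. Everything here is an assumption made for illustration: the prompt wording, the 1 to 5 scale, and the `call_llm` callable, which is stubbed so the example runs without a provider.

```python
import re
from typing import Callable, Optional

JUDGE_TEMPLATE = (
    "Rate the answer from 1 (wrong) to 5 (fully correct).\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Reply with the number only."
)


def parse_score(reply: str) -> Optional[int]:
    """Extract a standalone digit from 1 to 5; return None if the reply has none."""
    match = re.search(r"\b([1-5])\b", reply)
    return int(match.group(1)) if match else None


def judge(question: str, answer: str, call_llm: Callable[[str], str]) -> Optional[int]:
    """Ask the judge model to grade an answer and parse its numeric rating."""
    prompt = JUDGE_TEMPLATE.format(question=question, answer=answer)
    return parse_score(call_llm(prompt))


def stub_judge_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "Score: 4"


score = judge("What is LLMOps?", "Operational practices for LLM apps.", stub_judge_model)
```

Returning `None` for an unparseable reply, rather than a default score, keeps malformed judge outputs from silently skewing the results.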

Can Agenta be used for monitoring live applications?

Absolutely. Agenta's observability features are built for production. It can trace all requests to your LLM application, giving you insights into latency, costs, and intermediate steps. You can set up live evaluations to continuously monitor for performance regressions and instantly convert any problematic trace into a test case for debugging and future prevention.
